What is StableBeluga2?

Stable Beluga 2 is an auto-regressive language model from Stability AI, fine-tuned from Llama2 70B. It is designed to follow instructions and produce contextually aware text, whether the task is crafting poems, drafting informative articles, or assisting with creative writing.

What are the features of StableBeluga2?

- Advanced Language Processing: Built on the Llama2 architecture, Stable Beluga 2 is designed to understand and generate human-like text.

- Orca-Style Dataset Training: The model is fine-tuned on an Orca-style dataset, which is intended to improve its ability to follow complex instructions and produce coherent outputs.

- Dynamic Conversational Prompts: Users can interact with the model using a simple prompt structure, facilitating engaging conversations and creative exchanges.

- Safety and Ethical Considerations: Like other large language models, Stable Beluga 2 can generate biased or inappropriate content. Stability AI urges developers to conduct safety testing tailored to their application before deployment.

- High Efficiency: The model was trained with AdamW optimization and mixed-precision training, techniques that make more efficient use of computational resources during training.
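The prompt structure mentioned in the list above can be sketched as a small helper. This is a minimal illustration, not an official API: the function name `build_prompt` and the constant `DEFAULT_SYSTEM` are hypothetical, and the layout simply mirrors the `### System` / `### User` / `### Assistant` format used in the example code further down this page.

```python
# Hypothetical helper: assembles a single-turn prompt in the
# "### System / ### User / ### Assistant" layout shown on this page.
DEFAULT_SYSTEM = (
    "You are Stable Beluga, an AI that follows instructions extremely well. "
    "Help as much as you can. Remember, be safe, and don't do anything illegal."
)


def build_prompt(user_message: str, system_message: str = DEFAULT_SYSTEM) -> str:
    """Return the full prompt string for one user message."""
    return (
        f"### System:\n{system_message}\n\n"
        f"### User: {user_message}\n\n"
        "### Assistant:\n"
    )


if __name__ == "__main__":
    print(build_prompt("Write me a poem please"))
```

Keeping the layout in one function means the system message can be swapped per application without retyping the section markers.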

What are the characteristics of StableBeluga2?

Stable Beluga 2 stands out due to its unique characteristics, including:

- Size and Scale: As a 70 billion parameter model, it possesses the capability to generate intricate and nuanced text outputs.

- User-Centric Design: The system prompt tells the model to follow instructions and be as helpful as it can, which suits it to assisting users across a variety of tasks.

- Training Procedure: The model is trained via supervised fine-tuning on its Orca-style dataset, which is intended to improve its versatility across different language tasks.
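To put the 70 billion parameter figure in practical terms, the following back-of-the-envelope sketch estimates how much memory the weights alone occupy at different precisions. It assumes exactly 70e9 parameters (a rounding of "70 billion") and counts only the weights, not activations or the attention cache, so real requirements will be higher. The function name `weight_memory_gb` is illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption: "70 billion parameters" taken as exactly 70e9.
PARAMS = 70e9

if __name__ == "__main__":
    # 2 bytes per parameter for float16, 4 bytes for float32.
    for name, nbytes in (("float16", 2), ("float32", 4)):
        print(f"{name}: ~{weight_memory_gb(PARAMS, nbytes):.0f} GB")
```

At 2 bytes per parameter the weights come to roughly 140 GB, which is why a model of this size is typically spread across several GPUs rather than loaded onto one.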

What are the use cases of StableBeluga2?

The diverse capabilities of Stable Beluga 2 open doors for numerous application scenarios, such as:

- Content Creation: Ideal for bloggers, marketers, and content writers looking for assistance in generating high-quality articles, blog posts, and marketing material.

- Educational Tools: Provides students and educators with a reliable tool for generating educational content, summaries, and even complex explanations of topics.

- Creative Writing Aid: Assists authors in brainstorming ideas, writing poetry, or refining prose through conversational engagement.

- Chatbot Development: Enhances user interaction in customer support systems or service platforms, providing instant responses that align with user queries.

- Research Assistance: Helps researchers generate summaries of academic papers, proposals, or project outlines quickly and accurately.

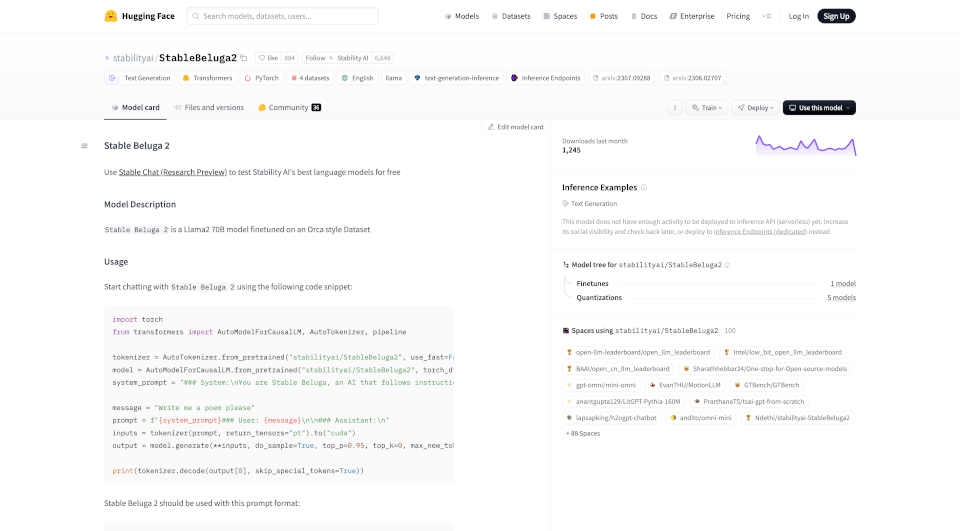

How to use StableBeluga2?

To interact with Stable Beluga 2, follow these simple steps:

- Import the necessary libraries and model using the provided code snippet.

- Define a system prompt that sets the context for interaction.

- Formulate your query or task as a user message.

- Pass both prompts to the model for it to generate a response.

- Decode and display the output to see the generated text.

Example Code Snippet:

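```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the tokenizer and the model weights in half precision.
# device_map="auto" places the model's layers on the available devices.
tokenizer = AutoTokenizer.from_pretrained("stabilityai/StableBeluga2", use_fast=False)
model = AutoModelForCausalLM.from_pretrained("stabilityai/StableBeluga2", torch_dtype=torch.float16, low_cpu_mem_usage=True, device_map="auto")

# The system prompt sets the context; the user message carries the task.
system_prompt = "### System:\nYou are Stable Beluga, an AI that follows instructions extremely well. Help as much as you can. Remember, be safe, and don't do anything illegal.\n\n"
message = "Write me a poem please"
prompt = f"{system_prompt}### User: {message}\n\n### Assistant:\n"

# Tokenize the prompt and move the tensors to the GPU (a CUDA device is assumed).
inputs = tokenizer(prompt, return_tensors="pt").to("cuda")

# Sample up to 256 new tokens using nucleus sampling (top_p); top_k=0 disables top-k filtering.
output = model.generate(**inputs, do_sample=True, top_p=0.95, top_k=0, max_new_tokens=256)

# Decode the generated token IDs back into text and print it.
print(tokenizer.decode(output[0], skip_special_tokens=True))
```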
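The decoded output in the example includes the prompt as well as the model's reply, because the generated sequence starts with the input tokens. A minimal sketch for keeping only the reply is shown below. The helper `extract_reply` is hypothetical and assumes the decoded text reproduces the `### Assistant:` marker exactly as it was written in the prompt.

```python
ASSISTANT_MARKER = "### Assistant:\n"


def extract_reply(decoded: str, marker: str = ASSISTANT_MARKER) -> str:
    """Return the text after the last assistant marker.

    If the marker is not found, the whole string is returned (stripped).
    """
    _, found, tail = decoded.rpartition(marker)
    return tail.strip() if found else decoded.strip()


if __name__ == "__main__":
    sample = (
        "### System:\nYou are Stable Beluga.\n\n"
        "### User: Write me a poem please\n\n"
        "### Assistant:\nRoses are red"
    )
    print(extract_reply(sample))
```

If the marker turns out not to survive decoding verbatim, an alternative is to slice off the prompt tokens before decoding, using the length of the tokenized input.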

StableBeluga2 Company Information:

Stability AI is a leader in the field of artificial intelligence, focusing on creating and promoting open-source technologies that empower developers and businesses globally. They aim to advance and democratize AI through open science principles.

StableBeluga2 Contact Email:

For inquiries regarding Stable Beluga 2, please contact: [email protected]