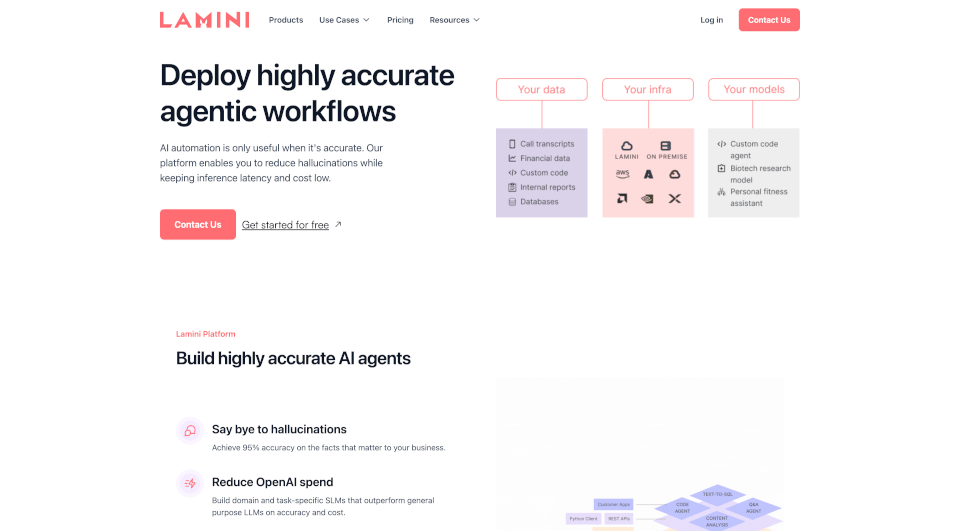

What is Lamini?

Lamini is an enterprise LLM platform for software teams that develop and deploy their own large language models (LLMs). It focuses on improving performance, reducing hallucinations, and ensuring safety, and it lets organizations draw on their proprietary documents, potentially billions of them, to build highly specialized LLMs. Lamini can be installed on-premises or in the cloud, giving companies a secure and scalable environment for their AI workloads. Through a strategic partnership with AMD, Lamini runs LLMs efficiently on AMD GPUs, and it serves both Fortune 500 enterprises and AI startups.

What are the features of Lamini?

Higher Accuracy with Memory Tuning

Lamini uses Memory Tuning to reach high accuracy on specialized tasks while keeping inference latency and cost low. Lamini reports that models tuned this way significantly outperform general-purpose models.

Diverse Use Cases

Lamini supports a wide range of applications, including:

- Text-to-SQL: Build accurate and efficient agents that translate natural-language questions into SQL queries.

- Classification: Automate manual classification tasks efficiently, making unstructured data actionable.

- Function Calling: Let models call external tools and APIs, connecting them to the systems your team already uses.
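Function calling usually means the model emits a structured call (a tool name plus arguments) that application code parses and executes. The Python sketch below shows that dispatch step in generic form; it is not Lamini's API. The JSON shape `{"name": ..., "arguments": {...}}` and the tools `get_order_status` and `add` are hypothetical assumptions for illustration.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the model can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> str:
    # Hypothetical stand-in for a real API call (e.g. an order-management service).
    return f"Order {order_id} has shipped"


@tool
def add(a: float, b: float) -> float:
    return a + b


def dispatch(model_output: str) -> Any:
    """Parse a JSON function call emitted by the model and run the matching tool.

    Assumes (hypothetically) the model was prompted to reply with
    {"name": <tool name>, "arguments": {<keyword arguments>}}.
    """
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # 5
```

Rejecting unknown tool names keeps the model from invoking anything outside the registry; the tool's return value would normally be sent back to the model as context for its final answer.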

Memory RAG

Memory RAG is Lamini's approach to retrieval-augmented generation (RAG). It is designed to simplify the RAG process while improving accuracy, and by reducing hallucinations it produces more reliable outputs, which makes it a good fit for applications that demand high fidelity.
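This article does not describe how Memory RAG works internally, so the sketch below shows only a plain RAG baseline for comparison: retrieve the documents most similar to a query and place them in the prompt as context. It uses bag-of-words cosine similarity as a simple stand-in for a real embedding model; the documents and the functions `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (stand-in for embeddings)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Ground the model by putting only the retrieved context in the prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical document store.
docs = [
    "The refund window for enterprise plans is 30 days.",
    "Support tickets are answered within one business day.",
    "Invoices are issued on the first day of each month.",
]
print(build_prompt("How long is the refund window?", docs, k=1))
```

A production pipeline would typically replace the term-count vectors with learned embeddings and a vector index; the score, rank, and assemble-prompt structure stays the same.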

Automated Classification

Lamini's Classifier replaces manual data labeling with a scalable classification system. It can classify large unstructured datasets quickly and accurately, reducing the manual effort that labeling would otherwise require.
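A common pattern when automating labeling is to accept high-confidence predictions automatically and route the rest to a person. The sketch below assumes a classifier that returns a label and a confidence score. The keyword-based `classify` function is a hypothetical placeholder for a tuned model, not Lamini's Classifier, and the 0.75 threshold is an arbitrary choice.

```python
import re
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float


# Hypothetical keyword lists standing in for a tuned classifier model.
KEYWORDS = {
    "billing": {"invoice", "refund", "charged"},
    "technical": {"error", "crash", "timeout"},
}


def classify(text: str) -> Prediction:
    """Toy classifier: confidence is the share of keyword hits won by the top label."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {label: len(words & kws) for label, kws in KEYWORDS.items()}
    total = sum(scores.values())
    if total == 0:
        return Prediction("unknown", 0.0)
    best = max(scores, key=scores.get)
    return Prediction(best, scores[best] / total)


def classify_batch(texts: list[str], threshold: float = 0.75):
    """Split texts into auto-labeled items and items routed to manual review."""
    auto, review = [], []
    for text in texts:
        pred = classify(text)
        if pred.confidence >= threshold:
            auto.append((text, pred.label))
        else:
            review.append((text, pred.label))
    return auto, review


auto, review = classify_batch([
    "Please refund the duplicate invoice",
    "The app shows an error after a refund",
    "Hello there",
])
print(auto)         # [('Please refund the duplicate invoice', 'billing')]
print(len(review))  # 2
```

Raising the threshold sends more items to review but fewer mislabeled ones into the automated path; the right value depends on how costly a wrong label is.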

What are the characteristics of Lamini?

Lamini is characterized by its:

- High Accuracy: Achieve up to 95% accuracy on specialized tasks, minimizing errors and maximizing trust in AI outputs.

- Cost Efficiency: Rather than relying on OpenAI's general-purpose models, users can fine-tune domain-specific LLMs that deliver better results on their tasks at a lower cost.

- Security Compliance: Lamini can be deployed in secure environments, such as on-premises or air-gapped setups, so sensitive data stays protected.

What are the use cases of Lamini?

Lamini can be applied in many scenarios, including:

- Customer Support Automation: Scale customer service capabilities, freeing up human agents to tackle complex queries and build stronger customer relationships.

- Business Intelligence: Enable teams to run self-service business analysis by converting natural-language questions into SQL queries, which streamlines decision-making.

- Code Assistance: Provide tailored coding assistants for niche programming languages, helping developers navigate and understand unfamiliar codebases.
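For text-to-SQL in business intelligence, model-generated SQL is usually checked before it touches real data. This Python sketch, using only the standard `sqlite3` module, accepts a single `SELECT` statement and turns on SQLite's `query_only` pragma as a second guard. The `sales` table and the generated query are hypothetical stand-ins for a model's output; this is not Lamini's text-to-SQL interface.

```python
import sqlite3


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Execute model-generated SQL only if it is a single read-only SELECT statement."""
    stripped = sql.strip().rstrip(";")
    # Conservative check: reject multiple statements and anything that is not a SELECT.
    if ";" in stripped or not stripped.lower().startswith("select"):
        raise ValueError("only a single SELECT statement is allowed")
    return conn.execute(stripped).fetchall()


# Hypothetical example database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EMEA", 120.0), ("EMEA", 80.0), ("APAC", 50.0)],
)
conn.commit()
# Second guard: SQLite refuses any write on this connection from now on.
conn.execute("PRAGMA query_only = ON")

# Hypothetical model output for "What were total sales per region?"
generated = "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region;"
print(run_generated_sql(conn, generated))  # [('APAC', 50.0), ('EMEA', 200.0)]
```

The string check alone is easy to get wrong, which is why the sketch also relies on the database to reject writes; a read-only database role serves the same purpose on server databases.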

How to use Lamini?

To get started with Lamini, users should follow these general steps:

- Set up the Platform: Choose an on-premises or cloud installation based on your organization's needs.

- Fine-Tune Your LLM: Use Lamini to fine-tune your model on proprietary data, optimizing for accuracy and performance.

- Implement Use Cases: Deploy the trained models into specific applications such as customer service automation, text-to-SQL, or industry-specific classification tasks.

- Monitor and Maintain: Regularly assess model performance and adapt as necessary to maintain high accuracy and relevance.
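The monitoring step can be as simple as periodically scoring the deployed model on a held-out labeled set and flagging it for re-tuning when accuracy drops. The sketch assumes case-insensitive exact-match accuracy as the metric and a 0.9 threshold, both arbitrary choices; the held-out examples and the `predictions` lookup standing in for the model are hypothetical.

```python
from typing import Callable


def evaluate(predict: Callable[[str], str], labeled: list[tuple[str, str]]) -> float:
    """Return case-insensitive exact-match accuracy of `predict` on (input, expected) pairs."""
    if not labeled:
        raise ValueError("need at least one labeled example")
    correct = sum(predict(x).strip().lower() == y.strip().lower() for x, y in labeled)
    return correct / len(labeled)


def needs_retuning(accuracy: float, threshold: float = 0.9) -> bool:
    """Flag the model when accuracy falls below an (assumed) acceptable threshold."""
    return accuracy < threshold


# Hypothetical held-out set and a lookup table standing in for the deployed model.
holdout = [
    ("Refund my invoice", "billing"),
    ("App crashes on login", "technical"),
    ("Charged twice this month", "billing"),
    ("Timeout when exporting", "technical"),
]
predictions = {
    "Refund my invoice": "Billing",
    "App crashes on login": "technical",
    "Charged twice this month": "technical",
    "Timeout when exporting": "technical",
}

acc = evaluate(lambda text: predictions[text], holdout)
print(acc, needs_retuning(acc))  # 0.75 True
```

Keeping the held-out set fixed between runs makes scores comparable over time; refreshing it occasionally with recent production examples helps catch drift.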