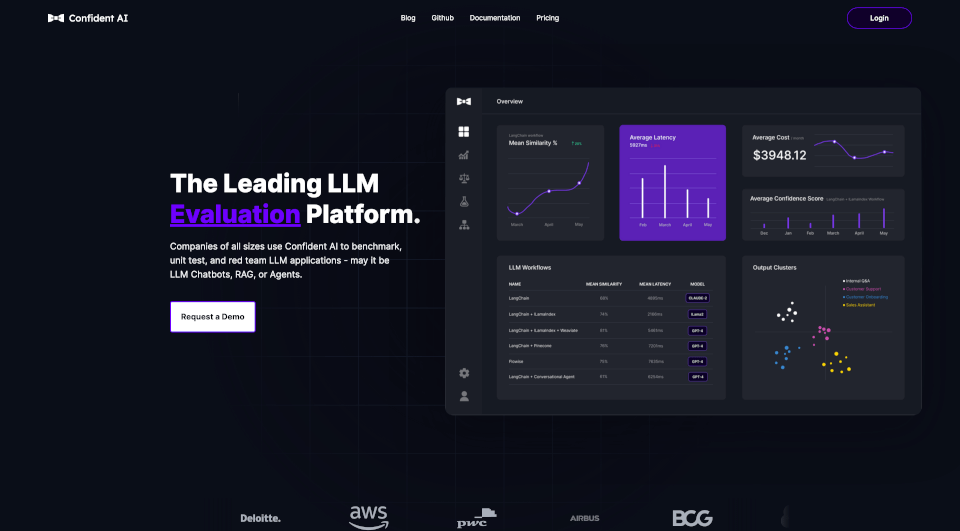

What is Confident AI?

Confident AI is an all-in-one LLM evaluation platform for benchmarking and quantifying the performance of large language model (LLM) applications. It lets businesses of all sizes implement custom LLM metrics. With a focus on improving LLM chatbots, Retrieval-Augmented Generation (RAG) pipelines, and agents, Confident AI helps teams assess their AI systems and deploy LLM solutions with confidence.

What are the features of Confident AI?

Automatic Regression Detection: Confident AI’s platform allows users to automatically catch regressions in LLM systems. With unit testing capabilities, users can compare test results, detect any performance drift, and identify the root causes of regressions. This ensures consistent and reliable performance of LLMs in different applications.
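
As a rough illustration of the idea, the sketch below compares per-test scores from two evaluation runs and flags any test whose score dropped by more than a tolerance. It is plain Python and does not use the Confident AI or DeepEval APIs; the function name `find_regressions`, the test names, the scores, and the 0.05 tolerance are all hypothetical.

```python
def find_regressions(baseline, candidate, tolerance=0.05):
    """Return {test_name: score_drop} for tests that got worse by more than `tolerance`."""
    regressions = {}
    for name, base_score in baseline.items():
        new_score = candidate.get(name)
        if new_score is None:
            continue  # test absent from the candidate run, so there is nothing to compare
        drop = base_score - new_score
        if drop > tolerance:
            regressions[name] = round(drop, 3)
    return regressions


# Hypothetical metric scores (0 to 1) from two runs of the same test suite.
baseline_run = {"refund_policy": 0.92, "shipping_times": 0.88, "greeting": 0.95}
candidate_run = {"refund_policy": 0.71, "shipping_times": 0.90, "greeting": 0.93}

regressions = find_regressions(baseline_run, candidate_run)
print(regressions)
```

Here only `refund_policy` is flagged, because the other two tests moved by less than the tolerance. A hosted platform would typically add root-cause detail on top of a comparison like this.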

Research-Backed Evaluation Metrics with DeepEval: DeepEval provides research-backed metrics for evaluating LLM systems. These metrics deliver accuracy and reliability comparable to human evaluation and cover a variety of LLM systems, including RAG, agents, and chatbots.

Advanced LLM Observability: Companies can run A/B tests on different hyperparameters, such as prompt templates and model configurations. Real-time feedback from these tests shows how an LLM system performs under each configuration, which supports better decision-making and optimization.
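
To make the A/B comparison concrete, here is a minimal sketch that ranks configurations by their mean evaluation score. It is plain Python, not the platform's API; the configuration labels, the scores, and the helper `rank_configs` are assumptions made for illustration.

```python
from statistics import mean


def rank_configs(results):
    """Sort configurations by mean score, best first."""
    averaged = [(name, mean(scores)) for name, scores in results.items()]
    return sorted(averaged, key=lambda pair: pair[1], reverse=True)


# Hypothetical per-test-case scores for two prompt/model combinations.
results = {
    "prompt_v1 + model_a": [0.80, 0.70, 0.90],
    "prompt_v2 + model_a": [0.85, 0.90, 0.80],
}

ranking = rank_configs(results)
best_name, best_score = ranking[0]
print(f"Best configuration: {best_name} (mean score {best_score:.2f})")
```

With so few samples a difference in means is only suggestive; a real comparison would use more test cases per configuration.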

Tailored Synthetic Dataset Generation: Confident AI allows for the generation of synthetic datasets that are tailored specifically for each customer’s LLM evaluation needs. These datasets can be designed in accordance with the client’s knowledge base and customized for various output formats, ensuring relevance and accuracy.

Automated LLM Red Teaming: The platform features automated red teaming capabilities, helping users identify safety risks in their LLM applications. By discovering the most effective combinations of hyperparameters, such as different LLMs and prompt templates, users can optimize their applications for safety and effectiveness.

What are the characteristics of Confident AI?

- User-Friendly APIs: Confident AI provides a user-friendly API that allows for seamless integration with LLM systems for evaluation and monitoring in the cloud.

- Monitoring and Reporting Dashboard: The platform includes a powerful dashboard for detailed reporting and analytics, helping users track performance and identify improvement areas over time.

- Ground Truth Definitions: Users can define ground truths to benchmark LLM outputs against expected results, facilitating better evaluations and pinpointing areas that require iteration.

- Diff Tracking: Advanced diff-tracking features help users make iterative improvements, from adjusting prompt templates to selecting the right knowledge bases for their applications.

- Efficient Deployment: According to Confident AI, teams take 2.4 times less time to go from development to production, which makes deploying LLM solutions more efficient.
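
Two of the characteristics above, ground truth definitions and diff tracking, can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not how the platform implements them: `matches_ground_truth`, `prompt_diff`, and the sample strings are hypothetical, and a normalized exact match stands in for whatever comparison a real metric would use.

```python
import difflib


def matches_ground_truth(actual, expected):
    """Exact match after lowercasing and collapsing whitespace."""
    def normalize(text):
        return " ".join(text.lower().split())
    return normalize(actual) == normalize(expected)


def prompt_diff(old, new):
    """Return a unified diff between two versions of a prompt template."""
    return list(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile="prompt_v1", tofile="prompt_v2", lineterm="",
    ))


# Benchmark an output against a user-defined ground truth (sample data).
print(matches_ground_truth("Returns are accepted  within 30 days.",
                           "returns are accepted within 30 days."))

# Track what changed between two prompt template iterations (sample data).
old_prompt = "You are a helpful assistant.\nAnswer briefly."
new_prompt = "You are a helpful assistant.\nAnswer briefly and cite sources."
diff = prompt_diff(old_prompt, new_prompt)
print("\n".join(diff))
```

Pairing the two lets a score change be traced back to the specific prompt edit that preceded it.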

What are the use cases of Confident AI?

Confident AI is versatile and can be applied in several scenarios:

- Chatbots: Enhancing conversational AI through rigorous evaluation, optimizing responses, and ensuring relevance.

- Retrieval-Augmented Generation (RAG): Improving systems that leverage external knowledge sources to enrich responses and interactions.

- AI Agents: Optimizing AI-driven agents for specific tasks and operations through detailed performance analysis.

- Customer Support: Streamlining support operations through improved chatbot responses and reduced latency.

- Marketing Campaigns: Utilizing LLM technology to generate engaging and targeted content for campaigns.

How to use Confident AI?

To get started with Confident AI:

- Create an Account: Sign up on the Confident AI platform.

- Integrate with Your Tools: Connect your existing tools and platforms so that their LLM outputs can be evaluated.

- Run Evaluations: Write and execute test cases in Python, utilizing the DeepEval framework for accurate evaluations.

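```python
from deepeval import confident_evaluate
from deepeval.test_case import LLMTestCase

# Replace the "..." placeholders with your application's input and output.
test_case = LLMTestCase(input="...", actual_output="...")

# Evaluate the test case under the experiment name "RAG Test".
confident_evaluate(experiment_name="RAG Test", test_cases=[test_case])
```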

You can install the required package using:

```shell
pip install -U deepeval
```

Once the evaluations are set up, start running tests to monitor your LLM performance.