What is Berri?

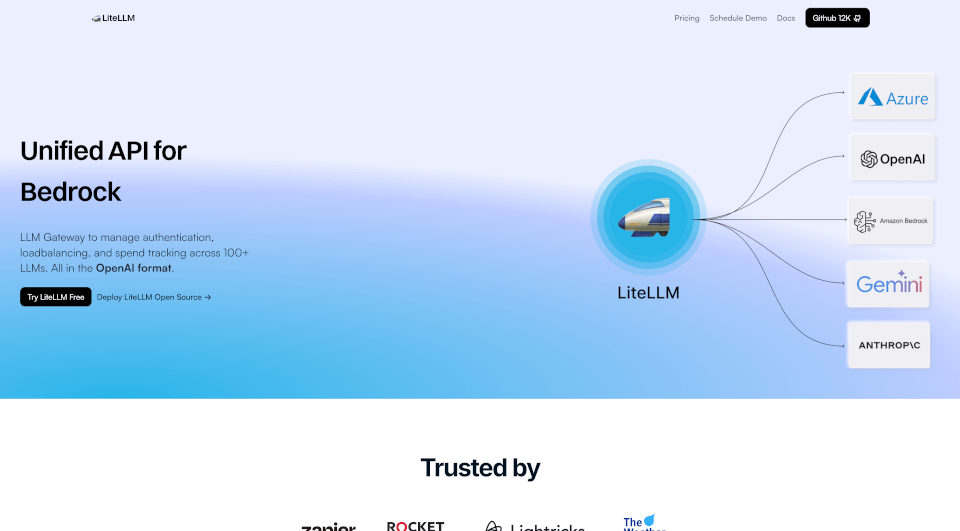

LiteLLM, the open-source project from Berri (BerriAI), streamlines the management of multiple large language models (LLMs) through a unified API. It supports load balancing, fallbacks, and spend tracking, and integrates with more than 100 LLMs, including prominent providers such as OpenAI, Azure OpenAI, Vertex AI, and Bedrock. Whether you're a developer, data scientist, or AI enthusiast, LiteLLM lets you work with these models through one interface instead of a separate integration per provider.

What are the features of Berri?

- Unified API for Multiple Providers: LiteLLM aggregates various models into a single API interface, allowing developers to interact with multiple LLMs in a standardized way.

- Load Balancing: Distributes requests across the configured LLMs so that no single model is overloaded and performance stays consistent.

- Fallback Mechanisms: In the event of an API failure, LiteLLM automatically reroutes requests to alternative models, ensuring uninterrupted service.

- Spend Tracking: Manage and monitor your expenditure on LLMs with precision. LiteLLM offers tools to set budgets and track spending effectively.

- Virtual Keys and Budgets: Generate virtual keys for different teams or projects and allocate specific budgets to manage costs effectively.

- Monitoring and Logging: Continuous monitoring of requests and performance with integrations for Langfuse, Langsmith, and OpenTelemetry allows for thorough analytics and logging.

- Authentication and Audit Logging: Supports JWT authentication, single sign-on (SSO), and audit logging to enhance security and compliance.

- Community and Setup Support: With over 200 contributors and an active community, help with setting up LiteLLM is readily available.
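
To make the load balancing and fallback features above more concrete, here is a minimal sketch of the general technique: round-robin scheduling with fallback to the next backend on failure. It is not LiteLLM's implementation or API. The names `SimpleRouter`, `make_backend`, and `ProviderError`, as well as the `provider-a/b/c` backends, are hypothetical and exist only for illustration.

```python
import itertools
from typing import Callable, Dict, List

# Hypothetical stand-in for a provider call; not LiteLLM's API.
Backend = Callable[[str], str]


class ProviderError(Exception):
    """Raised by a backend to simulate a provider-side failure."""


def make_backend(name: str, healthy: bool = True) -> Backend:
    """Build a fake backend that either answers or fails every call."""
    def call(prompt: str) -> str:
        if not healthy:
            raise ProviderError(f"{name} is unavailable")
        return f"[{name}] reply to: {prompt}"
    return call


class SimpleRouter:
    """Round-robin load balancing with fallback to the remaining backends."""

    def __init__(self, backends: Dict[str, Backend]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = backends
        self._names = list(backends)
        self._cycle = itertools.cycle(range(len(self._names)))

    def complete(self, prompt: str) -> str:
        start = next(self._cycle)  # backend scheduled for this request
        errors: List[str] = []
        # Try the scheduled backend first, then the others in order.
        for offset in range(len(self._names)):
            name = self._names[(start + offset) % len(self._names)]
            try:
                return self._backends[name](prompt)
            except ProviderError as exc:
                errors.append(str(exc))
        raise ProviderError("all backends failed: " + "; ".join(errors))


router = SimpleRouter({
    "provider-a": make_backend("provider-a"),
    "provider-b": make_backend("provider-b", healthy=False),
    "provider-c": make_backend("provider-c"),
})
replies = [router.complete("hello") for _ in range(3)]
for reply in replies:
    print(reply)
```

In this sketch the second request is scheduled for the unhealthy `provider-b` and is answered by `provider-c` instead, which is the behavior the fallback bullet describes. A production router would also need timeouts, retries, and health tracking, which are left out here.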

What are the characteristics of Berri?

LiteLLM stands out in the rapidly evolving AI landscape due to its robust architecture and user-centric design. It is characterized by:

- 100% Uptime Guarantee: With extensive infrastructure support, users can rely on uninterrupted access to LLM services.

- Open Source Flexibility: LiteLLM is available as an open-source solution, which means users can modify and host it according to their needs, promoting adaptability and innovation.

- High Demand Reliability: More than 90,000 Docker pulls and more than 20 million requests served point to LiteLLM's adoption and the community's trust in it.

- Seamless Integration: By providing a simple yet powerful unified API, LiteLLM ensures your applications can easily connect to various LLMs without hassle.
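
The idea of a unified API can be sketched as a single entry point that inspects the model name and dispatches to a provider-specific adapter. The example below is a simplified illustration, not LiteLLM's code. The function `unified_completion`, the `ADAPTERS` registry, the echo adapters, and the `provider/model` naming scheme are all assumptions made for this sketch.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]
Handler = Callable[[str, List[Message]], str]


def split_model(model: str, default_provider: str = "openai") -> Tuple[str, str]:
    """Split 'provider/model-name'; bare names fall back to the default provider."""
    provider, sep, name = model.partition("/")
    if not sep:
        return default_provider, model
    return provider, name


def echo_adapter(provider: str) -> Handler:
    """Hypothetical adapter: a real one would call the provider's own API."""
    def handle(model_name: str, messages: List[Message]) -> str:
        last_message = messages[-1]["content"]
        return f"{provider}:{model_name} -> {last_message}"
    return handle


# Hypothetical registry mapping provider prefixes to adapters.
ADAPTERS: Dict[str, Handler] = {
    name: echo_adapter(name)
    for name in ("openai", "azure", "vertex_ai", "bedrock")
}


def unified_completion(model: str, messages: List[Message]) -> str:
    """One call signature for every provider; routing happens on the prefix."""
    provider, name = split_model(model)
    if provider not in ADAPTERS:
        raise ValueError(f"unknown provider: {provider}")
    return ADAPTERS[provider](name, messages)


messages = [{"role": "user", "content": "ping"}]
print(unified_completion("bedrock/anthropic.claude-v2", messages))
print(unified_completion("gpt-4o", messages))
```

The calling code stays the same when the model string changes, which is the property the bullet above attributes to a unified API.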

What are the use cases of Berri?

LiteLLM is versatile and can be effectively utilized across multiple domains:

- Content Generation: Businesses can use LiteLLM to automatically generate written content, blogs, marketing materials, and product descriptions, reducing the time and effort involved.

- Conversational Agents: Implementing LiteLLM in chatbots or virtual assistants boosts their responsiveness and accuracy by pulling insights from various LLMs.

- Data Analysis: Researchers and analysts can leverage LiteLLM to synthesize vast amounts of data, enhancing decision-making capabilities through advanced analysis.

- Education and Learning Tools: Educators can create interactive learning experiences by integrating LLMs to provide instant feedback or personalized learning paths for students.

- Creative Applications: Artists and creators can use LiteLLM to generate new ideas, improve creativity, and explore artistic avenues in music, writing, and visual arts.

How to use Berri?

To get started with LiteLLM, follow these steps:

- Install LiteLLM: Download and install LiteLLM through the official repository or Docker.

- Create an Account: Sign up on the LiteLLM platform to gain access and obtain API keys.

- Configure Providers: Choose your preferred LLM providers and configure them through the LiteLLM dashboard.

- Set Up Load Balancing: Define your load balancing preferences to optimize request distribution.

- Implement API Calls: Use the LiteLLM Python SDK or direct API calls in your application code to start leveraging LLM capabilities.

- Monitor Usage: Track spending and usage through the provided analytics dashboard to ensure optimal performance and cost-effectiveness.
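
The budgeting and monitoring steps above can be pictured with a small in-memory sketch of per-key spend tracking. This is a conceptual illustration under stated assumptions, not how LiteLLM stores keys or computes cost. `SpendTracker`, `VirtualKey`, `BudgetExceeded`, the key ID `vk-1`, the model name `model-small`, and the price of 0.5 USD per 1,000 tokens are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


class BudgetExceeded(Exception):
    """Raised when a request would push a key past its budget."""


@dataclass
class VirtualKey:
    team: str
    max_budget_usd: float
    spend_usd: float = 0.0


class SpendTracker:
    """Tracks spend per virtual key against a fixed budget."""

    def __init__(self, price_per_1k_tokens: Dict[str, float]) -> None:
        # Hypothetical price table: model name -> USD per 1,000 tokens.
        self._prices = price_per_1k_tokens
        self._keys: Dict[str, VirtualKey] = {}

    def issue_key(self, key_id: str, team: str, max_budget_usd: float) -> VirtualKey:
        key = VirtualKey(team=team, max_budget_usd=max_budget_usd)
        self._keys[key_id] = key
        return key

    def record(self, key_id: str, model: str, tokens: int) -> float:
        """Add the cost of one request, rejecting it if the budget would be exceeded."""
        key = self._keys[key_id]
        cost = self._prices[model] * tokens / 1000
        if key.spend_usd + cost > key.max_budget_usd:
            raise BudgetExceeded(f"{key_id} ({key.team}) would exceed its budget")
        key.spend_usd += cost
        return cost

    def remaining(self, key_id: str) -> float:
        key = self._keys[key_id]
        return key.max_budget_usd - key.spend_usd


tracker = SpendTracker({"model-small": 0.5})
tracker.issue_key("vk-1", team="research", max_budget_usd=2.0)
tracker.record("vk-1", "model-small", tokens=2000)
print(tracker.remaining("vk-1"))
```

Checking the budget before adding the cost means a rejected request leaves the recorded spend unchanged, so the remaining budget always reflects only the requests that were actually allowed.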